Misinformation. It’s not always a matter of deliberate falsehoods. In the context of local government performance—particularly in complex reports about Special Educational Needs (SEN) provision—misinformation can be accurate but incomplete, out of context, or even just plain wrong. In the case of Surrey County Council, we believe this is not simply an issue of data errors but of how performance management, scrutiny, and oversight are at risk of being compromised by incomplete or poorly presented information.

As a local authority, Surrey has a critical responsibility to ensure the information it produces is accurate, transparent, and supports effective governance and accountability. However, as we’ve highlighted in our last four reports, we believe some of the information Surrey has publicly shared (and also – notably – not shared) about its SEND provision contains serious flaws. In our view, these flaws have led to a breakdown in scrutiny and oversight, creating a real risk of misguiding strategy and undermining governance structures. Our analysis suggests that Surrey’s focus on process-driven metrics, especially timeliness, may have masked significant declines in performance, with a number of these issues seemingly slipping under the radar because of the incomplete way data has been presented for scrutiny.

At Measure What Matters, we believe that for local government to function effectively, data for decision-making must be open-sourced, verifiable, benchmarked, and timely. By contrast, Surrey’s approach to reporting data has, in our view, lacked both openness and integrity, leading to clear confusion among those responsible for oversight of services. Over the past few weeks, as these issues have emerged, we’ve seen instances of entirely incorrect performance statistics being publicly cited. Even more troubling, however, are the ill-conceived and pervasive narratives that have taken root: stories built on what seems to be a fundamental misunderstanding of the true nature of the evolving crisis.

Here are a handful of the types of misleading statements we have seen made publicly in relation to the issues being discussed:

“Often we do find that what the parents think is in the best interest of the child isn’t necessarily what the experts think is in the best interest of the child”

Radio Interview, Leader, Surrey County Council, Aug ’24

“…Surrey is a special case requiring urgent and increased government action. Nationally SEND education affects around 18% but in Surrey this grows to a staggering 39%, double the national average”

MP contribution, Parliamentary debate on SEND, Sep ’24

“So, matching desired provision, with ongoing assessed need is an ongoing challenge for the additional needs and disabilities services”

Scrutiny Committee comments, Senior Manager, Children, Families and Lifelong Learning Directorate, Jun ’24

“Well, actually, this isn’t really a financial issue… this is not necessarily about the money”

Radio Interview, Leader, Surrey County Council, Aug ’24

“…The situation (in Surrey, relating to EHCPs) was recognised and effective action was taken to restore services which are now performing well above the national average”

Social Media response, County Cllr., Sep ’24

“Since the implementation of our timeliness recovery plan, our timeliness has considerably improved. In-month timeliness to issue plans is now above 70% and as been since May of this year”

Published letter to MPs, Leader, Surrey County Council, Sep ’24

“Surrey’s rate of complaints for 2023 was high than usual (but) we are already seeing the rate of complaints fall. However I hope you will concede that the absolute number of complaints in a local authority serving one of the largest populations in the country will always be higher than the average”

Published letter to MPs, Leader, Surrey County Council, Sep ’24

“…That is in addition to the fact that that figure of applications for EHCPs is still rising I believe”

Scrutiny Committee comments, County Cllr., Jun ’24

Ironically, the confusion has now resulted in finger-pointing, with Surrey County Council’s responses seemingly singularly focused on deflecting blame. Unfortunately, this defensiveness is perhaps inevitable when data surfaces that appears to contradict the deeply entrenched official narrative. Meanwhile, our concern remains that, despite the visibility of what appear to be worrying trends in performance, the critical issues are still being left unaddressed. As a result, we have to assume that governance continues to falter as these problems persist unchecked.

Uncovering the Truth: How Is Surrey Really Doing?

As those closely following this debate will know, our mission at Measure What Matters is to make essential information truly accessible. We believe clear, accurate, and verifiable data is fundamental for building trust and driving accountability in local government. By accessible, we mean genuinely clear. The issue we’ve consistently encountered is that while a wealth of data is available, it’s often buried in massive spreadsheets, stripped of context, full of jargon, and cluttered with outdated terminology. Judged against the “three-click rule” (the principle that users should be able to reach the information they need within three clicks), this data is nearly impossible to find, and even when located, it’s challenging to interpret or draw meaningful conclusions from.

We have previously reported on what we see as Surrey County Council’s fixation on timeliness in delivering SEND provision: specifically, the percentage of EHCPs issued within the statutory timeframe of 20 weeks. On the surface, this seems like a commendable focus, especially given that considerable administrative problems and delays in securing expert input had led to a growing backlog over the past couple of years. However, the Council’s eventual progress against this process-driven metric (“how many plans are being issued within 20 weeks?”) is repeatedly being presented as evidence of service improvement for families and children. A closer examination soon suggests that this focus may well have distracted from more critical issues around service quality and effectiveness, with potentially disastrous impacts on children and families. For instance, what is the actual average start-to-finish time for a child being assessed for an EHCP, and how has this changed? And how many of the plans being issued are later appealed? These questions point to deeper concerns that appear to have been largely overlooked (until now).

Additionally, much of the data presented for scrutiny in Surrey’s official performance reports is unsourced, missing crucial context, and lacking key indicators of comparative performance and trends. This highlights a deeper issue: the fundamental question of “how are we doing?” seems increasingly difficult to answer and is often misunderstood or misinterpreted. It’s hardly surprising, then, that the information we’ve been publishing has been met with confusion, concern, and even outright denial. People are asking: “How can this be? This isn’t how the information was reported to me. Why do the numbers look different? What is really happening here?”

Take this example:

Let’s say, for argument’s sake, that in 2023, 26% of Education, Health, and Care Plans (EHCPs) were issued on time, but by 2024, that figure has jumped to 61%. Great news, right? It looks like the problem has been fixed.

But let’s dig a little deeper. Suppose in 2023, the average start-to-finish time for a child needing additional support was 25 weeks—five weeks beyond the statutory 20-week timeframe. Fast forward to 2024, and now imagine that start-to-finish average time has actually increased to 28 weeks. Wait, what? How did that happen? You said we hit 61%?! Well, while more plans are being issued on time, the 39% still outside the timescales may now be delayed much longer, stretching the overall start-to-finish time.
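To see how both figures can move at once, here is a minimal Python sketch. The two cohorts of 100 plans are entirely hypothetical, with durations chosen only so that the results match the 26%/25-week and 61%/28-week figures in the example; `summarise` is an illustrative helper, not a tool Surrey or the DfE uses.

```python
from statistics import fmean

STATUTORY_WEEKS = 20  # statutory timeframe for issuing an EHCP


def summarise(durations_weeks):
    """Return (% of plans issued within 20 weeks, mean start-to-finish weeks)."""
    on_time = sum(1 for weeks in durations_weeks if weeks <= STATUTORY_WEEKS)
    pct_on_time = 100 * on_time / len(durations_weeks)
    return pct_on_time, fmean(durations_weeks)


# Hypothetical cohorts of 100 plans each (illustrative numbers only).
cohort_2023 = [18] * 26 + [26] * 47 + [30] * 27
# More plans now arrive within 20 weeks, but the late ones wait far longer.
cohort_2024 = [16] * 61 + [44] * 21 + [50] * 18

for year, cohort in (("2023", cohort_2023), ("2024", cohort_2024)):
    pct, avg = summarise(cohort)
    print(f"{year}: {pct:.0f}% on time, mean start-to-finish {avg:.1f} weeks")
```

Running it prints 26% on time with a 25.0-week mean for 2023, and 61% on time with a 28.0-week mean for 2024: the headline KPI more than doubles while the average wait gets three weeks longer.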

So, even though 61% of children are receiving their plans within the 20-week window, are things really improving? That’s up for debate. For instance, let’s say 30% of those families receiving plans on time are now appealing the decision because the plan they’ve been issued is incomplete or insufficient. And how long do these appeals take? Well, for the purposes of our example let’s guess an average of 52 weeks or more… Worse still, 98.3% of those appeals are upheld, meaning in almost every case, when legally examined, it’s agreed the original plan wasn’t good enough. So, technically, a plan was issued within 20 weeks, but in reality, the child is waiting an additional year for a tribunal to order suitable provision. So really, for that child, the start-to-finish time is now extended to 72 weeks+.
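The same arithmetic can be written out explicitly. This sketch uses only the hypothetical assumptions from the example (every plan issued within 20 weeks, 30% appealed, each appeal taking 52 weeks and, given the 98.3% figure, assumed to succeed) and separates the date a plan is issued from the date suitable provision is actually ordered. `effective_wait` is a hypothetical helper for illustration.

```python
STATUTORY_WEEKS = 20  # statutory timeframe for issuing an EHCP
APPEAL_WEEKS = 52     # assumed average appeal duration (hypothetical)


def effective_wait(issue_weeks, appealed, appeal_weeks=APPEAL_WEEKS):
    """Weeks from request to suitable provision being ordered.

    Assumes every appeal is upheld, so an appealed plan is only put right
    when the tribunal decides, appeal_weeks after the plan was issued.
    """
    return issue_weeks + (appeal_weeks if appealed else 0)


# Hypothetical: 100 plans, all issued at 20 weeks; the first 30 are appealed.
plans = [(20, i < 30) for i in range(100)]
waits = [effective_wait(weeks, appealed) for weeks, appealed in plans]

# The headline KPI only looks at when the plan was issued.
on_time_kpi = 100 * sum(1 for weeks, _ in plans if weeks <= STATUTORY_WEEKS) / len(plans)
# The child's experience depends on when suitable provision is ordered.
real_on_time = 100 * sum(1 for w in waits if w <= STATUTORY_WEEKS) / len(waits)

print(f"Headline KPI: {on_time_kpi:.0f}% of plans issued within 20 weeks")
print(f"Suitable provision within 20 weeks: {real_on_time:.0f}%")
print(f"Longest wait: {max(waits)} weeks; mean wait: {sum(waits) / len(waits):.1f} weeks")
```

It reports 100% against the headline KPI, but only 70% of children with suitable provision ordered within 20 weeks, a longest wait of 72 weeks and a mean of 35.6 weeks.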

Hang on. What about internal quality metrics? Surely they would identify problems with these plans before they end up heading for tribunal? Well, internal quality assurance reports already show that only 22% of plans meet acceptable standards.

So. Is this actually progress? We’re issuing some plans faster, but others are taking much longer. Meanwhile, the faster-issued plans are being appealed more frequently, resulting in far longer delays for children needing support. Hmm. It seems we might not have quite hit the mark here.

This example, though tongue-in-cheek and based entirely on hypothetical figures, illustrates a critical point: what you choose to measure matters. We spotted these poignant comments from case officers in a focus group discussing timeliness and the availability of Educational Psychologists, collated as part of the recently commissioned Additional Needs Review:

“We were trying to make the numbers look better. We were behind on the whole load, but the newer ones were being seen before the older ones to make the numbers look better”.

Surrey County Council Case Officer ’24

“Their main job was to work on the backlog of EP cases to make sure these plans were then issued, and they mainly stick to these statutory deadlines but it seems to be at the cost of everything else”

Surrey County Council Case Officer ’24

Cited from: Findings Report, Additional Needs and Disabilities’ Parent and Carer Experience Task Group, September ’24

It’s evident. Focusing on one metric without carefully considering the broader context or the behavioural consequences can quickly mask deeper issues and give a highly misleading impression of improvement.

In our opinion, based on what we have reviewed, Surrey’s approach to handling, interpreting and reporting on performance data raises serious questions. By failing to provide complete and transparent data in an accessible format to Councillors and Committees, the Council risks undermining its scrutiny and oversight processes, with significant consequences for governance. Put simply, you can’t fix the problem if you aren’t being shown where the cracks are. And let’s not forget: we’re not debating minor issues like grass-cutting schedules. We’re talking about critical information that determines the effectiveness of oversight of strategies and decisions related to Special Educational Needs provision for vulnerable children. These are life-changing decisions that, if mishandled, can cause profound harm by delaying or depriving those children of the essential support they need.

Case Study 1: Missing Information Leading to Misrepresentation of SEN Demand

Education & Lifelong Learning KPI Table, July ’24

Image of Surrey County Council Education and Lifelong Learning Key Performance Indicators, Performance Pack, September ’24

In our view, one of Surrey’s key failures in effective performance management is the persistent confusion and misrepresentation of demand for SEND services. Recent responses to our reports highlight how deeply data on SEND needs has been misinterpreted and misunderstood, both within Surrey County Council and by those defending its approach, largely because critical information is not being reported. In our opinion, this lack of transparency and clarity has only served to exacerbate matters, making it difficult for anyone to grasp the true scale or context of the issues within Surrey County Council’s SEND provision.

For instance, a local MP, while laudably calling for clearer and more accurate information, grossly (and almost certainly inadvertently) misrepresented the facts by claiming that 39% of all children in Surrey had SEN support needs—more than double the national average of 18%. This inflated figure was used to justify the argument that Surrey was a “special case” in need of additional government support and resources.

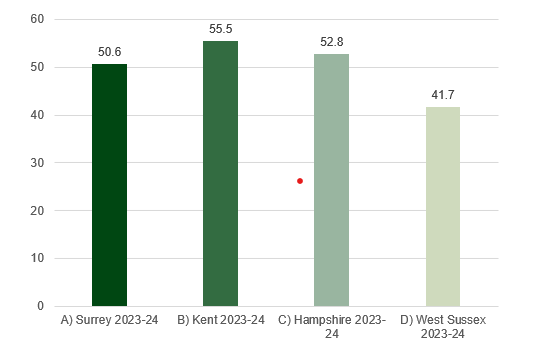

However, the official published comparative figure for children in Surrey with identified SEN needs requiring additional support is 19.5% (https://explore-education-statistics.service.gov.uk/find-statistics/special-educational-needs-in-england), only marginally higher than the national average of 18.7%. This open-sourced DfE data also shows the split of support: how many of those children are supported through EHCP provision (5.3%) and how many through provision ordinarily available in schools (14.2%), which, again, is broadly comparable to the national position. However, let’s not rush to judgement here; there is a lot of data flying about. A Surrey-published report titled ‘Surrey Inclusion and Additional Needs Strategy 2023-2026’ (Slide 1 (surreycc.gov.uk)) states that Surrey has a rate of 38.7 children with an EHCP per 1,000, which we suspect is where the misquoted 39% came from. That is, of course, only 3.87% (not 38.7%, or indeed 39%), and it is not directly comparable to the national figure cited anyway, because it excludes children who have identified SEND needs but are not supported by an EHCP. Hence the significant difference between the data points. Furthermore, this data has since been updated (see the High Needs LA Benchmarking tool: High needs benchmarking tool – GOV.UK (www.gov.uk)).

This table and supporting graph are extracted from a current comparative view of children (2-18 yrs) supported through EHCP provision, taken from the High Needs Benchmarking Tool:

However, the critical point here isn’t about what appears to us to be a genuine mistake by a concerned and well-intentioned MP, or the misplacement of a figure. It’s that this reflects a deeper problem: the complexity of SEND data can easily lead to misguided conclusions when the information is not properly and consistently reported from verifiable sources. It just shouldn’t be this difficult to get a clear picture of the issue. It’s undeniable that demand for SEND provision has risen nationally over the past decade, placing significant, sustained long-term pressure on the system. These are, without question, pressing issues that require national attention.
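Because the 39% figure appears to stem from a unit slip, it may help to spell the conversion out. A rate per 1,000 divides by 10 to give a percentage, and the DfE all-SEN figure is the sum of its EHCP and SEN support components. The numbers are those quoted above; the variable and function names are illustrative, and the sketch does not attempt to reconcile the different populations behind the 38.7 per 1,000 figure and the DfE percentages.

```python
def per_thousand_to_percent(rate_per_1000):
    """Convert a rate per 1,000 children into a percentage."""
    return rate_per_1000 / 10


# Figures quoted in the text above.
ehcp_per_1000 = 38.7         # Surrey strategy document: children with an EHCP
dfe_ehcp_pct = 5.3           # DfE: Surrey pupils supported through an EHCP
dfe_sen_support_pct = 14.2   # DfE: Surrey pupils supported through provision ordinarily available in schools
national_all_sen_pct = 18.7  # DfE: national all-SEN figure

surrey_ehcp_pct = per_thousand_to_percent(ehcp_per_1000)
surrey_all_sen_pct = dfe_ehcp_pct + dfe_sen_support_pct

print(f"38.7 per 1,000 = {surrey_ehcp_pct:.2f}%, not 38.7% or 39%")
print(f"Surrey all-SEN: {surrey_all_sen_pct:.1f}% vs national {national_all_sen_pct}%")
print(f"Ratio to national: {surrey_all_sen_pct / national_all_sen_pct:.2f}x")
```

This gives 3.87% for the per-1,000 figure and 19.5% against 18.7% for all SEN, roughly 1.04 times the national rate rather than double it.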

However, within this broader context, our concern is whether the evident, substantial local variations in how these challenges are being managed are even being properly identified. This raises critical questions about where, how, and to whom these system pressures are being transferred, and Surrey is a clear example. In our opinion, these local dynamics are not being adequately recognised or challenged in reporting, which is a significant concern.

We’ve already demonstrated that Surrey County Council’s pervasive rhetoric (that parent-driven demand for EHCPs is growing exponentially year on year) is, in the case of Surrey, both misleading and contradictory. A simple review of the Council’s own internal minutes reveals that officers are now reporting that demand for EHCP assessments has flattened and is even beginning to decline. But as you can see from the internal performance table shown above, trended data about demand on services does not appear to be reported to key committees, so is it any surprise that this picture is so fundamentally misunderstood?

Publicly framing Surrey as simply buckling under an unmanageable, ever-increasing wave of new requests appears to shift the focus away from the real issue: a noticeable, concerning, localised deterioration in the quality of services being provided. It is our opinion that, at this point, the available data no longer points to a large Local Authority grappling with overwhelming parent-led demand, but instead indicates systemic, and perhaps even cultural, issues with how services are being managed within Surrey County Council.

But most important of all, it is our opinion that the critical data those charged with oversight need in order to assess the situation comprehensively is simply not being provided.

This misrepresentation is leading to a significant misunderstanding by those responsible for oversight and makes effective scrutiny nearly impossible. If those charged with governance continue to believe, in general terms, that escalating demand is the root cause of all the problems, rather than recognising the alarming indicators of rapidly declining service quality, they are likely to misdirect resources and overlook the real issues. The fact that this narrative is still misunderstood and misreported underscores the very problem we’ve been addressing—a lack of published, clear, consistent, and benchmarked performance indicators. It’s possible to achieve this level of clarity. It must be done.

Case Study 2: Failure to Contextualize Key Information

Another critical problem is Surrey’s failure to provide context for key data. This leads to serious metrics being glossed over or misunderstood, ultimately contributing to a breakdown in governance and scrutiny.

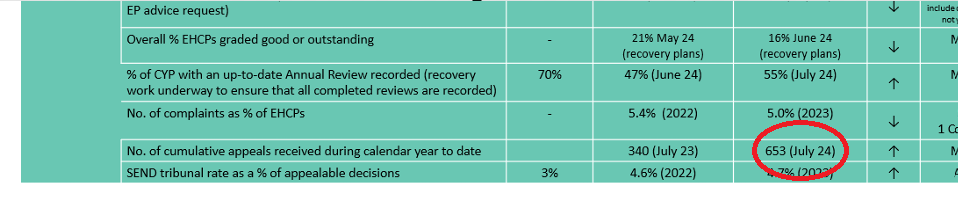

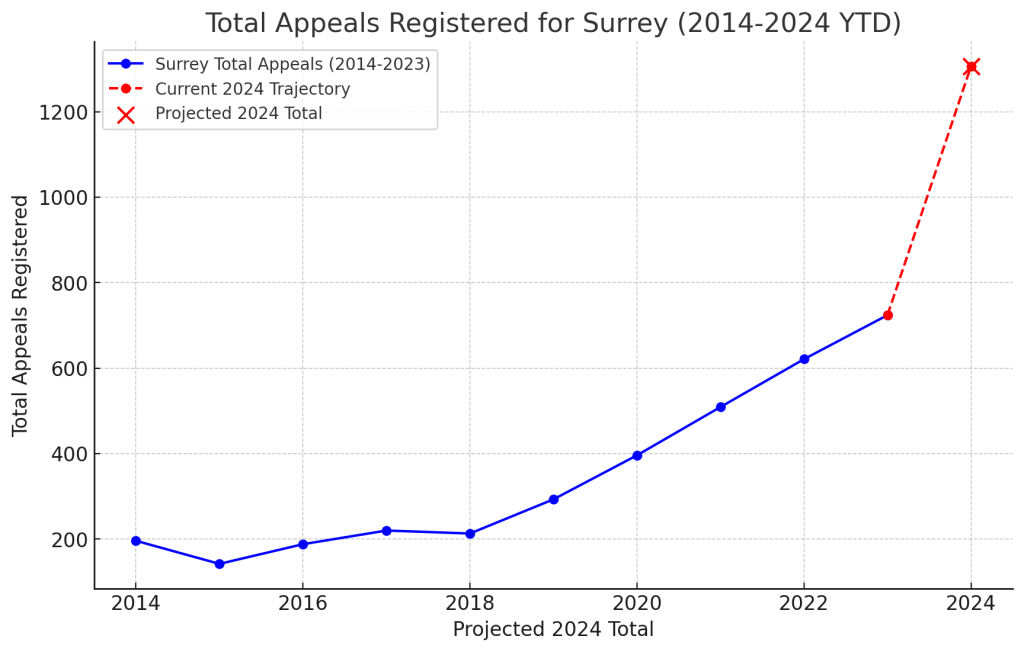

Take the Cumulative Appeals statistic from Surrey’s performance management table (see Table 1). The table includes a single row showing the number of cumulative appeals year to date, without any further context or supporting analysis. This makes the figure look like just another number, when in reality it is a critical red flag that should demand urgent intervention.

While the table shows the raw number of appeals, it fails to point out that, according to this data, appeals have nearly doubled over the past year. Nor does it show that Surrey already had the second-highest total volume of appeals in the country last year, or that its rate of appealed decisions was already nearly double the national average at the close of last year. Without this context, it’s very easy to overlook the true scale of the problem, one that appears to indicate startling differences in the lawfulness of SEND decision-making in Surrey.
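The context missing from the table comes down to two simple ratios. The sketch below shows how they could be computed; every input figure is a hypothetical placeholder rather than Surrey’s or national published data, and “appeal rate” is assumed here to mean appeals registered as a percentage of appealable decisions, which is one common way of expressing it.

```python
def appeal_rate(appeals_registered, appealable_decisions):
    """Appeals registered as a percentage of appealable decisions."""
    return 100 * appeals_registered / appealable_decisions


def pct_change(previous, current):
    """Percentage change from one period to the next."""
    return 100 * (current - previous) / previous


# Hypothetical placeholder figures, NOT published data.
local_rate = appeal_rate(450, 9_000)          # a local authority
national_rate = appeal_rate(14_000, 500_000)  # England
# Cumulative appeals at the same point last year vs this year.
ytd_change = pct_change(150, 285)

print(f"Local appeal rate {local_rate:.1f}% vs national {national_rate:.1f}% "
      f"({local_rate / national_rate:.1f}x)")
print(f"Cumulative appeals up {ytd_change:.0f}% on the same point last year")
```

With these placeholder inputs, the local rate is 5.0% against 2.8% nationally (about 1.8 times), and cumulative appeals are up 90%. Reporting ratios like these next to the raw count is what turns a single table row into a visible warning sign.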

When the cumulative appeals data is projected forward and visualized (as shown in the graph below), the magnitude of the problem becomes instantly clear.

This is why context matters. Without proper context, Surrey’s data is both incomplete and misleading, inevitably undermining the scrutiny process and impeding stakeholders from identifying where the true performance failures lie.
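A forward projection of cumulative appeals can be as simple as extending the year-to-date run rate. This is a minimal sketch under stated assumptions: appeals are assumed to accrue at a constant monthly rate (ignoring seasonality and any change in trend), and the input of 280 appeals after 7 months is hypothetical, not Surrey’s figure. `project_cumulative` is an illustrative name.

```python
def project_cumulative(cumulative_ytd, months_elapsed, months_in_year=12):
    """Extend a cumulative year-to-date count to the end of the year,
    assuming the average monthly rate so far continues unchanged."""
    monthly_rate = cumulative_ytd / months_elapsed
    return [round(monthly_rate * month) for month in range(1, months_in_year + 1)]


# Hypothetical: 280 appeals registered after 7 months of the year.
path = project_cumulative(280, 7)
print(path[6], "appeals at month 7 ->", path[-1], "projected by month 12")
```

Here it prints a projection of 480 appeals by month 12. A more careful projection might fit a trend, or compare against the same months in previous years, before plotting.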

What Needs to Change: Open-Sourced, Verifiable, Contextual Data

In our view, Surrey’s current approach to performance reporting not only lacks transparency and accuracy but also undermines public accountability. To restore proper oversight, Surrey must ensure that the data it produces is accurate, timely, and fully transparent. This would enable genuine scrutiny, rather than reliance on selective metrics that obscure the true picture. If Surrey is serious about improving oversight, it must first overhaul how its KPIs are agreed, tracked, and monitored.

Here’s how:

- Open-Sourced and Verifiable Data:

  - Surrey must make performance data publicly available and subject to independent verification. Transparency is key to ensuring the data is accurate and free from subjectivity or selective reporting. Without this openness, the information remains difficult to verify, and trust erodes.

- Contextualized and Benchmarked KPIs:

  - KPIs should be framed within the context of national benchmarks and show clear trends over time. This would enable all stakeholders to gauge whether performance is improving or deteriorating, providing a clearer picture of local challenges compared to broader trends.

- Measurement Approach:

  - Surrey must shift away from superficial, process-driven metrics like processing speed and instead focus on more holistic measures, such as start-to-end times that reveal how long it takes children to actually access support. Reporting should also show the bandwidth (the spread) of those times, offering insight into how much families’ experiences vary. This more comprehensive reporting would better capture the real impact on children, families, and schools, highlighting service gaps that need to be addressed.
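As a rough illustration of the measurement approach above, the sketch below reports the median start-to-end time and its spread (the interquartile range, one possible way of showing “bandwidth”) alongside the headline timeliness figure and a benchmark. The durations and the benchmark value are hypothetical, and `summarise_waits` is an illustrative name, not an existing tool.

```python
from statistics import median, quantiles


def summarise_waits(weeks, benchmark_median, statutory_weeks=20):
    """Summarise start-to-end times (request to support in place), in weeks."""
    q1, _, q3 = quantiles(weeks, n=4)  # quartile cut points
    return {
        "median_weeks": median(weeks),
        "interquartile_range": q3 - q1,
        "pct_within_statutory": 100 * sum(w <= statutory_weeks for w in weeks) / len(weeks),
        "vs_benchmark_median": median(weeks) - benchmark_median,
        "longest_weeks": max(weeks),
    }


# Hypothetical start-to-end times for ten children, in weeks.
waits = [14, 18, 19, 20, 22, 26, 31, 40, 52, 72]
for key, value in summarise_waits(waits, benchmark_median=22).items():
    print(f"{key}: {value}")
```

For these made-up figures the median is 24 weeks, 2 weeks above the hypothetical benchmark; only 40% of children are supported within 20 weeks; and the longest wait is 72 weeks, all of which a single timeliness percentage would hide.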

Conclusion: Data Integrity, Governance, and Accountability

It is our opinion that Surrey County Council’s current approach to performance management and oversight, particularly in the context of SEND provision, is deeply flawed. Misinformation, whether through misinterpreted, incomplete, or poorly contextualised data, has significantly impacted the ability of those responsible for oversight to hold the Council accountable. Furthermore, we can see evidence of how this lack of understanding and oversimplification has fuelled the spread of misleading, and in some cases highly damaging, narratives in discussions surrounding SEND provision.

The selective presentation of data not only risks misguiding strategy but also contributes to a serious breakdown in governance. By apparently overlooking critical factors such as those we have highlighted in our series of reports about SEND provision, Surrey risks harming those most in need of support. This approach also leads to widespread misunderstanding, making meaningful scrutiny nearly impossible.

For Surrey to regain public trust and improve outcomes, it must embrace transparency. Data must be open-sourced, verifiable, contextualised, and benchmarked against national and comparison-group performance. Only by overhauling its approach and attitude to performance reporting can Surrey provide an accurate reflection of its service provision, enabling stakeholders to make informed decisions and ensure accountability.

With this in mind, one final reflection. The Local Government Association (LGA) and County Councils Network (CCN) recently commissioned a report exploring “the need for fundamental reform of the SEND system in England”: Towards an effective and financially sustainable approach to SEND in England (Local Government Association). We intend to provide a more detailed review of this report in the coming weeks, as we believe it has considerable implications not only for the future accountability and oversight of SEND provision by Local Authorities, but also for governance in Local Government as a whole. For now, it seems fitting to include this particular quote from the findings section of the report:

“LA Leaders questioned whether it was appropriate to have a judicial body, not only making retrospective judgements on the rights and wrongs of public bodies’ decision making practice, but taking active decisions about the educational provision and placements of children and young people…”

Towards an Effective and Financially Sustainable Approach to SEND in England, ISOS Partnership, July 2024.

In our view, the position recorded in the ISOS Partnership’s report serves as a clear and deeply concerning warning. The suggestion of reducing judicial scrutiny of public bodies’ decision-making is unusual and raises serious alarms, especially given the issues we see both nationally and, specifically, in our work looking at Surrey County Council. As you’ll know, at Measure What Matters we are focused on improving governance and accountability in Local Government. In our opinion, judicial oversight is a crucial check on public bodies, ensuring they comply with the law and protect individuals’ rights, particularly when internal oversight mechanisms are failing. Removing or diminishing this scrutiny is rare and risky, as it threatens to erode accountability and transparency, potentially allowing public bodies to operate unchecked.

Given the significant gaps in oversight and evidence of potentially flawed decision-making that we have identified, we can understand how any discussion of reducing legal protection would strike fear into the hearts of families who evidently already struggle to secure the critical support their children need. We’ll take a closer look at this issue in a follow-up report.

What you choose to measure, matters. Without accurate, timely, and contextualized data, effective oversight is impossible. Without oversight, meaningful scrutiny cannot occur, and without scrutiny, governance breaks down. In our view, it’s time for Surrey County Council to take responsibility, provide a complete and transparent picture of its performance, and begin the crucial work of restoring trust and confidence in its ability to serve the community.

Measure what matters.
